Neural Network Nightmare: Finding Privacy Leaks in Image Recognition APIs

A defensive, technical walkthrough of privacy and model-extraction issues in image-recognition APIs - discovery, attacks (metadata leakage, facial embeddings, model inversion, adversarial), PoCs, and concrete mitigations.

Disclaimer (educational & defensive only)

The techniques in this post explain vulnerabilities and mitigations for image-processing APIs. Use this information only on systems you own or where you have explicit written permission. Do not run offensive tests against third-party systems without authorization.

Modern computer-vision APIs are powerful: they can classify scenes, extract text, detect faces, and summarize complex visual data. That capability is also a liability when misconfigured. This article synthesizes an anonymized research write-up and expands it into a practical guide for engineers, red teams, and privacy reviewers. It shows how seemingly harmless features leak sensitive data - from EXIF/GPS metadata to facial embeddings and even reconstructable training data - and gives hands-on PoCs and hardening steps.

Key takeaways up front:

- Vision APIs can leak personal data (IDs, addresses, GPS) when they extract metadata or OCR documents.

- Facial recognition endpoints that return embeddings or identifiers create a long-term privacy risk.

- Model inversion and membership inference can reveal training data, and model extraction can clone proprietary models.

- Poorly rate-limited APIs allow large-scale extraction and model theft.

- Defenses require careful API design, input/output minimization, and monitoring.

1) Where the risk starts: discovery & reconnaissance

An assessment begins with simple discovery: enumerating likely endpoints and calling them with benign test images. Common patterns:

- `/api/v1/analyze`, `/api/v1/detect`, `/api/image/classify`

- `/api/v1/faces/recognize`, `/api/biometric/process`

- `/api/vision/process`, `/api/object/detect`

A quick reconnaissance script will test these standard paths and log any 200 responses and the shape of returned JSON.

Example - discovery probe (defensive PoC)
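A minimal sketch of such a probe, using only the Python standard library. The path list comes from the patterns above; the raw-bytes POST with an `application/octet-stream` content type, the `probe` and `json_shape` helpers, and the `lab.test` host are assumptions for illustration, since real APIs often expect multipart uploads and authentication. Only the structure of each JSON response is recorded, never its values.

```python
import json
import urllib.error
import urllib.request

# Paths taken from the common patterns listed above.
CANDIDATE_PATHS = [
    "/api/v1/analyze", "/api/v1/detect", "/api/image/classify",
    "/api/v1/faces/recognize", "/api/biometric/process",
    "/api/vision/process", "/api/object/detect",
]


def http_post(url, body, timeout=5.0):
    """POST raw bytes and return (status, text); network failures give (None, '')."""
    request = urllib.request.Request(
        url, data=body, method="POST",
        headers={"Content-Type": "application/octet-stream"},  # assumed upload format
    )
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status, response.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        return err.code, ""
    except (urllib.error.URLError, OSError):
        return None, ""


def json_shape(value):
    """Describe the structure of a JSON value without keeping any of its data."""
    if isinstance(value, dict):
        return {key: json_shape(item) for key, item in value.items()}
    if isinstance(value, list):
        return [json_shape(value[0])] if value else []
    return type(value).__name__


def probe(base_url, image_bytes, post=http_post):
    """Return the paths that answered 200, with the shape of each response."""
    findings = []
    for path in CANDIDATE_PATHS:
        status, text = post(base_url.rstrip("/") + path, image_bytes)
        if status != 200:
            continue
        try:
            shape = json_shape(json.loads(text))
        except ValueError:
            shape = "non-JSON body"
        findings.append({"path": path, "shape": shape})
    return findings
```

Passing a stub as `post` lets the probe logic be exercised offline before it is pointed at an in-scope host, for example `probe("https://lab.test", open("benign.jpg", "rb").read())`.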

Run such probes only on systems in-scope.

2) EXIF & metadata leakage: the silent privacy leak

Images captured by phones often contain EXIF data (camera model, GPS, timestamps). If an API extracts and returns that metadata verbatim, it exposes the uploader's location and device information to whoever receives the response.

Attack pattern:

- Upload an image containing GPS EXIF.

- Request `detailed_analysis=True` or a similar flag.

- Observe the returned JSON for `gps`, `exif.camera`, or `location`.

Why it's bad: GPS coordinates reveal a user's precise location. Device model or serials may leak corporate or personal device identifiers.

Defensive checklist:

- Strip EXIF and other metadata server-side before analysis unless explicitly needed.

- If metadata must be accessible, return only coarse location (city-level) and always obtain user consent.

- Log and alert on requests that contain EXIF with precise coordinates.
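The first two checklist items can be sketched as follows. This is a simplified illustration for baseline JPEG input only: it walks the segment headers and drops every APP1 segment, which is where EXIF (and XMP) data is stored, and it rounds coordinates to one decimal place (roughly 11 km of latitude) as a stand-in for city-level location. The function names are hypothetical, and a production service would more likely rely on a maintained imaging library and handle other formats such as PNG or HEIC.

```python
import struct

SOI = b"\xff\xd8"  # start-of-image marker
APP1 = 0xE1        # segment type that carries EXIF (and XMP)
SOS = 0xDA         # start-of-scan: compressed pixel data follows


def strip_app1(jpeg: bytes) -> bytes:
    """Return the JPEG with every APP1 segment removed."""
    if not jpeg.startswith(SOI):
        raise ValueError("not a JPEG stream")
    out = bytearray(SOI)
    pos = 2
    while pos + 4 <= len(jpeg):
        if jpeg[pos] != 0xFF:
            raise ValueError("unexpected byte where a segment marker should be")
        marker = jpeg[pos + 1]
        if marker == SOS:
            break  # everything from here on is image data, copied as-is below
        # The 2-byte big-endian length counts itself but not the marker.
        (length,) = struct.unpack(">H", jpeg[pos + 2:pos + 4])
        if marker != APP1:
            out += jpeg[pos:pos + 2 + length]
        pos += 2 + length
    out += jpeg[pos:]
    return bytes(out)


def coarsen_location(lat: float, lon: float, places: int = 1):
    """Round coordinates so only an approximate area is disclosed."""
    return round(lat, places), round(lon, places)
```

Stripping before analysis, rather than filtering the response afterwards, means downstream components never see the metadata at all.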

3) OCR and document extraction: PII bleeding out of images

Vision APIs often offer OCR and structured-document extraction. These features can return passport numbers, SSNs, or account numbers when a user uploads documents.

Key controls:

- Use data-minimization: return only redacted text or safe tokens.

- Add sensitivity detectors that flag and block returning high-risk fields (like national IDs) unless the caller proves justification.

- Provide redaction options and require strong auth for returning raw OCR.
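A minimal sketch of redaction-by-default for OCR output, assuming the text has already been extracted. The two regular expressions (a US-style SSN and a 13 to 19 digit card or account number) and the `redact_ocr_text` name are illustrative assumptions; real detectors need locale-specific patterns, checksum validation, and a classifier for free-form fields.

```python
import re

# Illustrative patterns only; they will miss many formats and can over-match.
PATTERNS = {
    "US_SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "CARD_OR_ACCOUNT": re.compile(r"\b(?:\d[ -]?){12,18}\d\b"),
}


def redact_ocr_text(text):
    """Replace high-risk patterns with tokens and report which kinds were found."""
    findings = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED:{label}]", text)
        if count:
            findings.append(label)
    return text, findings


if __name__ == "__main__":
    sample = "Name: Jane Doe SSN 123-45-6789 card 4111 1111 1111 1111 exp 01/30"
    print(redact_ocr_text(sample))
```

The `findings` list gives the API something to log or alert on without storing the sensitive values themselves.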

4) Facial recognition & embeddings - a long-term privacy trap

A critical finding in many reviews is when APIs return facial embeddings or any stable facial identifier. Embeddings are numeric vectors; while not human-readable, they allow deterministic matching, cross-referencing, and long-term tracking.

Risks:

- Cross-usage: embeddings can be used to match faces across datasets, enabling cross-site tracking and re-identification.

- Exfiltration: if an attacker can retrieve embeddings at scale, they can reconstruct or link identities.

- Unauthorized matches: returning `face_id` or `matched_person` without consent exposes private identity linkage.

Safe alternatives:

- Never return raw embeddings. If matching is needed, perform server-side comparisons and return only boolean or ephemeral tokens.

- Limit retention of any face descriptors and rotate any matching tokens frequently.

- Require explicit consent and provide opt-out controls.
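The first alternative can be sketched like this: the comparison happens server-side, and the caller receives only a boolean plus a short-lived random token. The cosine-similarity threshold, the token lifetime, and the `match_response` name are hypothetical values chosen for illustration, not recommendations for any particular face model.

```python
import math
import secrets
import time

MATCH_THRESHOLD = 0.8     # hypothetical; must be tuned per model
TOKEN_TTL_SECONDS = 300   # hypothetical lifetime for the ephemeral token


def cosine_similarity(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def match_response(probe_embedding, enrolled_embedding, now=None):
    """Build the client-facing response; embeddings never leave the server."""
    now = time.time() if now is None else now
    matched = cosine_similarity(probe_embedding, enrolled_embedding) >= MATCH_THRESHOLD
    response = {"match": matched}
    if matched:
        # Random, unlinkable token: it is not derived from the face data.
        response["token"] = secrets.token_urlsafe(16)
        response["expires_at"] = now + TOKEN_TTL_SECONDS
    return response
```

Because the token is random rather than derived from the embedding, two calls for the same face yield different tokens, so the value cannot serve as a stable identifier.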

5) Model inversion & membership inference - how training data leaks

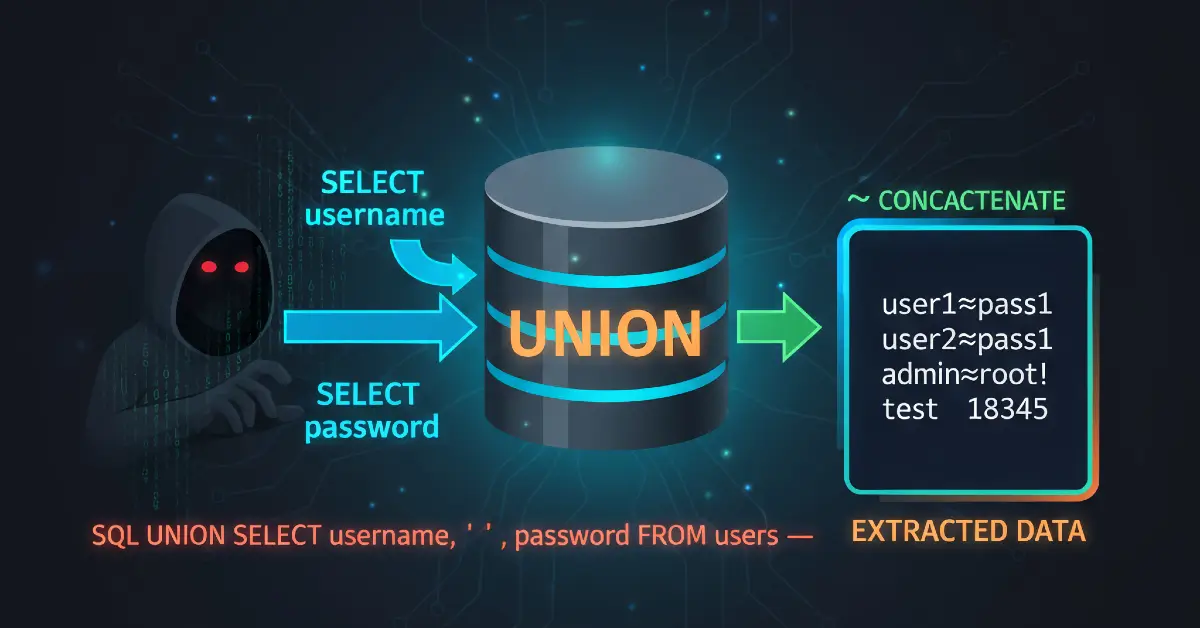

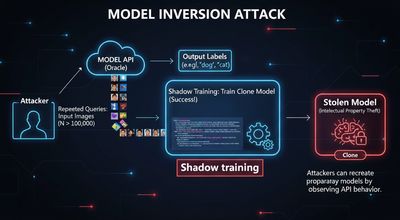

Model inversion / membership inference attacks are advanced, but feasible:

- Membership inference: an adversary queries a model with a candidate image and, from very high confidence scores or consistent outputs, infers that a particular sample was in the training set.

- Model inversion / extraction: with enough queries and returned probabilities, an adversary can reconstruct representative images (inversion) or train a clone (surrogate) model that approximates the API model (extraction).

Why it happens: models that are overfit or that return fine-grained scores (full probability vectors) leak more information.

Practical PoC pattern:

- Query the model with many inputs and record confidence vectors.

- For membership: repeatedly query target images; an unusually high, consistent confidence implies membership.

- For extraction: build a dataset from input→output pairs and train a local model; compare accuracy to the original.

Example - membership inference probe (defensive PoC)
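A minimal sketch of such a probe for use against your own model. It assumes a hypothetical `query_fn` callable that sends the image to the endpoint and returns a mapping of label to probability; the repeat count and the 0.95 threshold are illustrative heuristics, not calibrated values.

```python
from statistics import mean


def membership_signal(query_fn, image_bytes, repeats=5, threshold=0.95):
    """Query the same image several times and summarize top-class confidence."""
    confidences = [max(query_fn(image_bytes).values()) for _ in range(repeats)]
    avg_conf = mean(confidences)
    return {
        "avg_conf": avg_conf,
        # A small spread means the model answers consistently for this input.
        "spread": max(confidences) - min(confidences),
        "flag": avg_conf > threshold,
    }
```

A flag on its own proves little, because easy inputs also score high. The signal becomes meaningful when the same statistic is computed for comparable images known to be outside the training set and the target stands out from that baseline.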

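If the average top-class confidence (`avg_conf`) stays very high (>0.95) across repeated queries, and clearly above what comparable images known to be outside the training set receive, flag it as potential membership leakage.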

Mitigations:

- Reduce output granularity: avoid returning full probability vectors; return top-k labels without scores, or return coarse confidence buckets.

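- Add calibrated noise to returned scores for public APIs, and consider differentially private training (e.g. DP-SGD) to limit what the model memorizes in the first place.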

- Rate-limit and monitor unusual query patterns indicative of extraction attempts.
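The first mitigation can be sketched as a small response filter that sits between the model and the API layer. The bucket boundaries (0.9 and 0.6), the default `k`, and the function names are assumptions for illustration.

```python
def confidence_bucket(probability):
    """Map a probability to a coarse label so exact scores are never exposed."""
    if probability >= 0.9:
        return "high"
    if probability >= 0.6:
        return "medium"
    return "low"


def minimize_prediction(probabilities, k=3):
    """Reduce a full label->probability mapping to top-k labels plus one bucket."""
    ranked = sorted(probabilities.items(), key=lambda item: item[1], reverse=True)[:k]
    return {
        "labels": [label for label, _ in ranked],
        "confidence": confidence_bucket(ranked[0][1]) if ranked else "low",
    }


if __name__ == "__main__":
    full = {"cat": 0.97, "dog": 0.02, "fox": 0.01, "owl": 0.0}
    print(minimize_prediction(full, k=2))
```

Coarsening reduces the signal available per query; it does not remove it, so it works alongside rate limiting and monitoring rather than replacing them.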

6) Adversarial examples & model reliability

Adversarial attacks show that models can be tricked into misclassification with tiny perturbations. Attackers can use this to bypass content filters or, where user uploads feed back into training, to poison future model versions.

Defenses:

- Apply adversarial training or use input sanitizers.

- Detect and block inputs with abnormal gradients or perturbation patterns.

- Use ensembles and secondary checks for high-confidence but unexpected classifications.
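One simple form of secondary check, sketched here under stated assumptions: classify the input twice, once as received and once after reducing its bit depth, and route the request for review if the labels disagree. This is a heuristic in the spirit of feature-squeezing detectors, not a complete defense. The `classify` callable and the flat list of 8-bit pixel values are hypothetical simplifications.

```python
def reduce_bit_depth(pixels, bits=4):
    """Keep only the top `bits` bits of each 8-bit pixel value."""
    shift = 8 - bits
    return [(value >> shift) << shift for value in pixels]


def needs_secondary_review(classify, pixels, bits=4):
    """True when the label changes after low-order pixel detail is removed."""
    return classify(pixels) != classify(reduce_bit_depth(pixels, bits))
```

A prediction that depends on low-order pixel bits is unusual for natural images, which is why disagreement is treated as a warning sign. Legitimate inputs can also trip the check, so it suits escalation better than outright blocking.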

7) Practical, end-to-end PoC (lab-safe)

Below is a compact assessment framework (lab-only) combining discovery, metadata tests, facial checks, and membership probes. Use it on your own endpoints to validate defenses.
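One piece of such a framework can be sketched as a response auditor: it walks a parsed JSON response and reports the path of every field whose name suggests sensitive output. The names `gps`, `exif`, `location`, `face_id`, and `matched_person` come from the sections above; `embedding`, `embeddings`, and `probabilities` are assumed names that should be adapted to the API under test.

```python
SENSITIVE_KEYS = {
    "gps", "exif", "location",              # metadata leakage
    "face_id", "matched_person",            # identity linkage
    "embedding", "embeddings",              # assumed names for face descriptors
    "probabilities",                        # assumed name for full score vectors
}


def audit_response(payload, path=""):
    """Return the JSON paths of all fields whose key is in SENSITIVE_KEYS."""
    findings = []
    if isinstance(payload, dict):
        for key, value in payload.items():
            here = f"{path}.{key}" if path else key
            if key.lower() in SENSITIVE_KEYS:
                findings.append(here)
            findings.extend(audit_response(value, here))
    elif isinstance(payload, list):
        for index, item in enumerate(payload):
            findings.extend(audit_response(item, f"{path}[{index}]"))
    return findings


if __name__ == "__main__":
    sample = {"labels": ["cat"], "faces": [{"face_id": "abc", "embedding": [0.1]}]}
    print(audit_response(sample))
```

Run against recorded responses from each endpoint, the auditor reports field paths only, which keeps the assessment output itself free of sensitive values.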

Run only in permitted environments.

8) Logging, monitoring, and anomaly detection

Because many attacks rely on volume, effective logging and telemetry are critical:

- Log every request with a hashed client identifier, timestamp, and requested flags (e.g., `return_embeddings=True`).

- Alert on high query rates from the same client to sensitive endpoints.

- Monitor for repeated maximal-confidence answers (possible membership inference).

- Block or challenge (CAPTCHA / throttling) clients that enumerate images aggressively.
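The rate-based alert can be sketched as a sliding-window counter keyed by a hashed client identifier. The class name, the default limits, and the fixed salt are placeholders; a real deployment would keep the salt secret, store counters in shared infrastructure, and feed alerts into existing monitoring.

```python
import hashlib
from collections import defaultdict, deque


class QueryRateMonitor:
    """Flag clients that exceed max_requests within a sliding time window."""

    def __init__(self, max_requests=100, window_seconds=60.0):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # hashed client id -> request timestamps

    @staticmethod
    def hashed_id(client_id, salt=b"lab-salt"):
        """Hash the identifier so logs and counters never hold it in the clear."""
        return hashlib.sha256(salt + client_id.encode("utf-8")).hexdigest()

    def record(self, client_id, timestamp):
        """Record one request; return True if the client is now over the limit."""
        events = self._events[self.hashed_id(client_id)]
        events.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while events and events[0] <= timestamp - self.window_seconds:
            events.popleft()
        return len(events) > self.max_requests
```

A `True` result is the point at which to throttle, challenge, or alert. The same structure can be extended to count maximal-confidence answers per client rather than raw requests.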

9) Product & privacy controls (policy side)

- Explicit consent flows for facial processing.

- Retention and deletion policies for embeddings, OCR outputs, and metadata.

- Data access reviews and periodic audits of endpoints returning sensitive fields.

- Provide SDKs with safe-by-default options (redaction on by default, embeddings disabled).

10) Responsible disclosure & triage guide

If you find a vulnerable vision API:

- Stop active extraction and preserve minimal logs for triage.

- Create a short report: endpoint, minimal repro with synthetic inputs, observed sensitive field names in response.

- Share privately with the vendor security contact or bug-bounty program.

- Avoid publishing PoCs that enable mass extraction until the vendor patches.

Vendors should triage quickly: if embeddings or PII are returned, treat as high-severity and rate-limit/block public access while investigating.

Quick mitigation checklist

| Vulnerability | Immediate mitigation | Long-term fix |
|---|---|---|
| EXIF/GPS leakage | Strip EXIF server-side | Optionally allow coarse location + consent |
| OCR returns PII | Redact high-risk patterns by default | Use sensitive-data classifiers & policy |
| Embeddings exposed | Disable embeddings in public APIs | Server-side matching, ephemeral tokens only |
| Full probability vectors | Return labels only or coarse buckets | Apply DP/noise to predictions |
| Rate-limit bypass | Aggressive rate-limits + CAPTCHA | Per-client quotas, anomaly detection |
| Model inversion risk | Throttle / deny bulk queries | Use differential privacy & monitor extraction indicators |

Final thoughts

Vision APIs enable impressive functionality, but they also change the data-sensitivity profile of images. A single endpoint returning detailed metadata, embeddings, or full confidence vectors can turn into a privacy-exfiltration vector or a model-theft pipeline.

Engineers should design image-processing APIs with the same security-first mindset used for other sensitive services: minimize outputs, require explicit consent for biometric features, implement robust rate-limiting and monitoring, and treat embeddings and probability vectors as highly sensitive artifacts.

Stay safe, and remember: every image can be a data source - treat it accordingly. 🚨