The AI Eavesdropper: How Voice Assistants Were Secretly Recording Conversations

A defensive, technical write-up of a real-world voice-AI finding: discovery of voice endpoints, audio interception, command injection, privacy leakage and mitigations.

Disclaimer (educational & defensive only)

This post shares a summarized, anonymized report of another researcher's responsible disclosure. It explains attack techniques and defensive measures so engineers can fix issues. Do not test or exploit production systems without written authorization.

Voice assistants are everywhere: phones, smart speakers, TVs, and home hubs. They promise convenience, but when their APIs and streaming endpoints are exposed or misconfigured, the consequences are severe - real-time audio interception, command injection, privacy leakage, and persistent surveillance.

This write-up condenses a public, responsibly disclosed finding (anonymized) into a practical, defensive guide. It covers discovery patterns, lab-safe proof-of-concept techniques, the likely root causes, impact analysis, and an engineer-facing remediation checklist. The goal: empower defenders to audit and harden voice AI systems.

Summary of the finding (what happened)

A security researcher discovered exposed or poorly protected voice-processing endpoints operated by a smart-home vendor. By probing the API, the researcher found:

- unauthenticated or weakly authenticated STT/voice-processing endpoints (/speech-to-text, /voice/process),

- streaming endpoints that accepted WebSocket or HTTP chunked data for real-time sessions,

- parameters that allowed return_raw or return_transcript flags exposing full audio or transcripts,

- weak access controls and lack of proper session binding, enabling session hijacking and continuous listening,

- support for metadata flags that could be abused to request debug outputs or raw audio dumps,

- permissive behavior that accepted "command" metadata, facilitating command injection via embedded or steganographic audio.

Using a controlled lab, the researcher showed how these weaknesses could be combined into an end-to-end attack chain that intercepts live audio, injects commands, and exfiltrates transcripts to an external server.

Why this is serious

Voice data is highly sensitive:

- audio contains PII (names, phone numbers, addresses),

- transcriptions reveal private conversations, credentials, and payment details,

- voiceprints and biometrics can be used to impersonate users or bypass voice authentication,

- continuous listening can capture background meetings, private discussions, and business secrets.

A misconfigured voice pipeline transforms smart devices into surveillance vectors. Because voice APIs often store or forward data to cloud services, a single misconfiguration can affect thousands of users.

How the researcher discovered the issue (recon & probes)

The discovery followed standard recon patterns adapted for voice APIs:

- Mass endpoint enumeration: produce a list of likely voice endpoints (e.g., /api/v1/speech-to-text, /api/v1/audio/transcribe, /api/assistant/voice, /api/v1/conversation/stream) and probe them with safe POSTs.

- Test with a small audio payload: send short, innocuous audio (1 s) encoded as base64 and observe responses (status codes, returned fields, headers). Successful 200 responses indicate an active receiver.

- Check for streaming: attempt WebSocket or chunked HTTP connections to detect real-time streaming endpoints such as /conversation/stream or /voice/live.

- Inspect request/response metadata: look for parameters like return_raw_data, include_metadata, process_commands, session_id, or debug flags that could change behavior.

- Probe authentication: check whether endpoints require Bearer tokens, API keys, or device tokens, or whether they allow unauthenticated requests from the public internet.

The researcher automated these steps in a safe reconnaissance script to identify candidate endpoints for deeper analysis in an isolated lab.
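Defenders can run the same checks from the inside without sending any traffic. The sketch below audits a route inventory you already control. It flags voice-related routes that lack authentication or that accept the risky parameters listed above. The Route structure, the keyword hints and the parameter set are assumptions for illustration. This is not the researcher's script.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set, Tuple

# Hypothetical route descriptor; adapt to however your framework lists its routes.
@dataclass
class Route:
    path: str
    auth: Optional[str]  # e.g. "mtls" or "bearer"; None means unauthenticated
    params: Set[str] = field(default_factory=set)

# Keywords and parameter names are illustrative, taken from the probes above.
VOICE_HINTS = ("speech", "voice", "audio", "transcribe", "stream")
RISKY_PARAMS = {"return_raw", "return_raw_data", "return_transcript",
                "include_metadata", "process_commands", "debug"}

def audit_routes(routes: List[Route]) -> List[Tuple[str, str]]:
    """Return (path, finding) pairs for voice-related routes that look risky."""
    findings = []
    for route in routes:
        if not any(hint in route.path.lower() for hint in VOICE_HINTS):
            continue  # not a voice route; out of scope for this audit
        if route.auth is None:
            findings.append((route.path, "no authentication"))
        risky = sorted(route.params & RISKY_PARAMS)
        if risky:
            findings.append((route.path, "accepts " + ", ".join(risky)))
    return findings
```

Feed it the route table exported from your gateway or framework. Any finding on a streaming or transcription route is a candidate for the remediation steps later in this post.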

Reproducing the behavior (lab-safe PoC)

Below is a lab-only, non-destructive PoC that shows how to probe an endpoint for basic audio handling and whether it echoes back raw data or transcript fields. Do not run against third-party infrastructure.

If the response contains raw_audio, raw_chunks, return_raw_data:true, or long transcript fields, treat it as a red flag and limit testing to a controlled environment.
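Responses captured from your own lab instance can be triaged automatically. The following sketch assumes JSON responses. It walks the body and reports the red-flag fields named above. The key name "transcript" and the 500-character threshold for a "long" transcript are assumptions to adjust for the API under test.

```python
import json
from typing import Any, List

RED_FLAG_KEYS = {"raw_audio", "raw_chunks"}
MAX_TRANSCRIPT_CHARS = 500  # assumed threshold for a "long" transcript

def response_red_flags(body: str) -> List[str]:
    """Walk a JSON response body and list fields that suggest raw-data leakage."""
    flags: List[str] = []

    def walk(node: Any, path: str) -> None:
        if isinstance(node, dict):
            for key, value in node.items():
                here = f"{path}.{key}" if path else key
                if key in RED_FLAG_KEYS:
                    flags.append(f"{here}: raw audio field present")
                elif key == "return_raw_data" and value is True:
                    flags.append(f"{here}: raw data echo enabled")
                elif (key == "transcript" and isinstance(value, str)
                      and len(value) > MAX_TRANSCRIPT_CHARS):
                    flags.append(f"{here}: long transcript ({len(value)} chars)")
                walk(value, here)  # recurse into nested objects and lists
        elif isinstance(node, list):
            for index, item in enumerate(node):
                walk(item, f"{path}[{index}]")

    walk(json.loads(body), "")
    return flags
```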

Attack vectors found and how they chain

The researcher demonstrated several attack patterns - each dangerous on its own but far worse when chained.

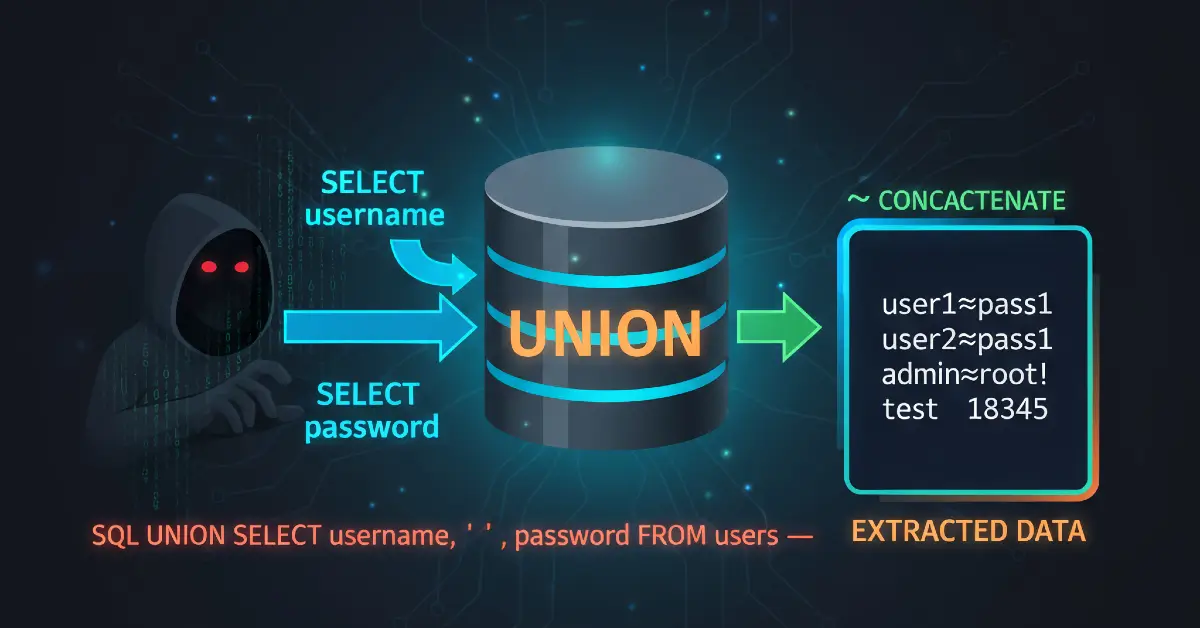

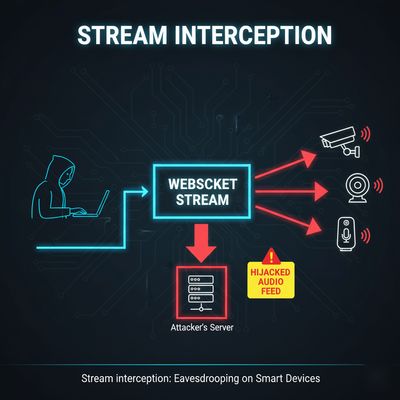

1. Real-time stream interception

If an endpoint accepts a start_stream request with insufficient session binding, an attacker can open a WebSocket and request continuous audio. Without strict origin and token checks, an attacker can receive chunks of users' live conversations.

2. Session hijacking

Long-lived session IDs or weak device tokens enable replays or session takeover. If an attacker obtains or guesses a session_id, they can connect to the same stream endpoint and receive audio.

3. Data exfiltration via debug flags

Some endpoints support return_raw_data or debug=true. If these flags can be set by request or metadata, they can cause the server to return full audio or raw transcripts that are then exfiltrated.
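On the server side, the simplest fix is to refuse debug-only flags outright in production rather than honoring them. The sketch below rejects any request carrying one of the flags named in this finding. The environment variable name APP_ENV is hypothetical. It defaults to "production", so a missing setting fails closed.

```python
import os
from typing import Any, Dict, Optional

# Flag names from the finding; any of them is a production violation.
DEBUG_FLAGS = {"debug", "return_raw", "return_raw_data", "return_transcript"}

class ForbiddenParameter(ValueError):
    """Raised when a request carries a debug-only flag in production."""

def enforce_production_params(params: Dict[str, Any],
                              environment: Optional[str] = None) -> Dict[str, Any]:
    # APP_ENV is a hypothetical variable; an unset value is treated as production.
    env = environment or os.environ.get("APP_ENV", "production")
    present = sorted(DEBUG_FLAGS.intersection(params))
    if env == "production" and present:
        raise ForbiddenParameter(f"debug-only parameters rejected: {present}")
    return dict(params)
```

Rejecting, rather than silently stripping, makes abuse attempts visible in logs. The same check should also run on any nested metadata object the pipeline accepts.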

4. Command injection via metadata or steganography

The researcher found that some voice pipelines accept metadata fields (e.g., {"process_commands":true}). If the backend treats metadata as control input without validation, an attacker can combine that with steganographic or ultrasonic audio to trigger unintended behavior.
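Treating metadata as data rather than control input comes down to an allowlist, plus an explicit server-side grant for any key that changes behavior. In this sketch the allowed key names are hypothetical, and `granted` stands for permissions taken from the authenticated session.

```python
from typing import Any, Dict, FrozenSet

# Hypothetical allowlist; list only keys your pipeline actually consumes.
ALLOWED_METADATA = {"locale", "device_id", "sample_rate"}
# Keys that change control flow and therefore need a server-side grant.
PRIVILEGED_METADATA = {"process_commands"}

def validate_metadata(metadata: Dict[str, Any],
                      granted: FrozenSet[str] = frozenset()) -> Dict[str, Any]:
    """Reject unknown keys; allow privileged keys only if the server granted them.

    `granted` must come from the authenticated session, never from the request.
    """
    unknown = set(metadata) - ALLOWED_METADATA - PRIVILEGED_METADATA
    if unknown:
        raise ValueError(f"unknown metadata keys: {sorted(unknown)}")
    for key in PRIVILEGED_METADATA.intersection(metadata):
        if key not in granted:
            raise PermissionError(f"{key} requires server-side authorization")
    return dict(metadata)
```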

5. Ultrasonic / frequency attacks

Embedding inaudible high-frequency (near-ultrasonic) signals can sometimes be recognized by voice-processing models but not by humans, enabling hidden commands. This requires carefully crafted audio but is a known vector.
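One defensive heuristic is to measure how much of a captured frame's energy sits above the audible band before the audio reaches the recognizer. The sketch below computes that ratio with a naive DFT in pure Python. The 18 kHz cutoff and any alerting threshold are assumptions to tune.

Two caveats apply:
- The check only works on audio sampled fast enough to represent that band (at least about 40 kHz, by Nyquist). Run it before any downsampling to a typical 16 kHz STT rate.
- Attacks that rely on microphone nonlinearity to shift an ultrasonic carrier into the audible band may leave little high-band energy in the recording.

```python
import math
from typing import List, Sequence

def high_band_energy_ratio(samples: Sequence[float], sample_rate: float,
                           cutoff_hz: float = 18_000.0) -> float:
    """Fraction of one-sided spectral energy at or above cutoff_hz (naive DFT)."""
    n = len(samples)
    if n == 0:
        return 0.0
    total = high = 0.0
    for k in range(n // 2 + 1):  # DFT bins from DC up to Nyquist
        re = im = 0.0
        for t, x in enumerate(samples):
            angle = 2.0 * math.pi * k * t / n
            re += x * math.cos(angle)
            im -= x * math.sin(angle)
        power = re * re + im * im
        total += power
        if k * sample_rate / n >= cutoff_hz:  # bin centre frequency in Hz
            high += power
    return high / total if total > 0 else 0.0

def tone(freq_hz: float, sample_rate: float = 48_000, n: int = 480) -> List[float]:
    """Synthetic test signal: n samples of a unit-amplitude sine."""
    return [math.sin(2.0 * math.pi * freq_hz * t / sample_rate) for t in range(n)]
```

The naive DFT is quadratic, so it only suits short frames like the 10 ms example here. A production filter would use an FFT library. A frame might then be flagged when the ratio exceeds an assumed value such as 0.2.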

6. Continuous monitoring + analysis

Once audio is intercepted, an attacker can stream it to an analysis pipeline (speech-to-text + NLP) to extract PII, credit card numbers, or security tokens.

Example PoC: safe simulation of session hijack (lab)

This simulated PoC shows the concept of connecting to a streaming endpoint with a session_id. Run it only against a localhost test harness.
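A fully in-process stand-in for such a harness is sketched below. No sockets are involved. It contrasts a registry that hands audio to anyone presenting a valid session_id with one that also checks the device the session was opened for. SessionRegistry and its methods are hypothetical names.

In a real system, the device_id passed to attach() must be derived from authentication, such as a client certificate. A client-supplied device ID would be as spoofable as the session ID.

```python
import secrets
from dataclasses import dataclass
from typing import Dict

@dataclass
class StreamSession:
    session_id: str
    device_id: str

class SessionRegistry:
    """In-memory stand-in for a streaming backend; no network involved."""

    def __init__(self, bind_to_device: bool) -> None:
        self.bind_to_device = bind_to_device
        self._sessions: Dict[str, StreamSession] = {}

    def start(self, device_id: str) -> str:
        # Unguessable ID from the OS CSPRNG, never a counter or timestamp.
        session_id = secrets.token_urlsafe(16)
        self._sessions[session_id] = StreamSession(session_id, device_id)
        return session_id

    def attach(self, session_id: str, device_id: str) -> bool:
        """Return True if this client would receive the session's audio chunks."""
        session = self._sessions.get(session_id)
        if session is None:
            return False
        if self.bind_to_device and session.device_id != device_id:
            return False  # right session ID, wrong authenticated device
        return True
```

With bind_to_device=False, a leaked session_id is enough to join the stream. With binding enabled, the same replay is refused while the original device still attaches normally.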

Impact assessment

Potential adversary capabilities if these weaknesses are present:

- Real-time eavesdropping of private conversations, meetings, and calls.

- Exfiltration of credentials spoken aloud (passwords, OTPs, account details).

- Biometric theft: voiceprints and speaker embeddings usable in other attacks.

- Unauthorized command execution on integrated smart home devices (unlock doors, disable alarms).

- Large-scale privacy breach because voice platforms often serve many users.

- Legal & compliance fallout: recording people without consent, violating GDPR/CCPA.

The severity is high when endpoints are internet-facing and lack proper authentication and logging.

Root causes (why the system failed)

Common engineering mistakes that enable these attacks:

- Secrets and debug flags present in public API parameters.

- Weak or absent authentication for streaming endpoints.

- Long-lived or guessable session IDs with no binding to device identity.

- Excessive debug/return options that leak raw audio or transcripts.

- Blind trust of metadata fields without validation.

- No rate limiting or anomaly detection on streaming or transcription requests.

- Lack of separation between diagnostic/debugging interfaces and production APIs.

Remediation checklist - practical fixes for engineering teams

Authentication & session binding

- Require strong auth (mutual TLS, scoped tokens) for any streaming endpoint.

- Bind sessions to device/client identity (certificate or hardware token).

- Use short-lived session tokens and rotate them frequently.
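The three points above can be combined in a short-lived token that is signed by the server and names the device it was issued to. The sketch uses only the standard library's hmac module. The claim names, the 300-second lifetime and the placeholder key are assumptions.

Where mutual TLS is available, device_id should be taken from the verified client certificate rather than from the request body.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Placeholder only: load the real key from a secret manager and rotate it.
SERVER_KEY = b"replace-with-a-key-from-your-secret-store"
TOKEN_TTL_SECONDS = 300  # assumed lifetime; keep it short

def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")

def _unb64(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))

def issue_token(device_id: str, now: Optional[float] = None) -> str:
    """Sign {device, expiry} so the token is useless on another device or later."""
    now = time.time() if now is None else now
    claims = {"dev": device_id, "exp": int(now) + TOKEN_TTL_SECONDS}
    payload = json.dumps(claims, separators=(",", ":")).encode("utf-8")
    signature = hmac.new(SERVER_KEY, payload, hashlib.sha256).digest()
    return f"{_b64(payload)}.{_b64(signature)}"

def verify_token(token: str, device_id: str, now: Optional[float] = None) -> bool:
    """Accept only an untampered, unexpired token issued to this device."""
    now = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = _unb64(payload_b64)
        signature = _unb64(signature_b64)
    except ValueError:  # wrong shape or invalid base64 (binascii.Error)
        return False
    expected = hmac.new(SERVER_KEY, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):  # constant-time comparison
        return False
    claims = json.loads(payload)
    return claims.get("dev") == device_id and now < claims.get("exp", 0)
```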

Remove debug paths from production

- Disable return_raw_data, debug, or return_raw flags in production.

- Move debug endpoints to isolated admin networks requiring multi-factor auth.

Strict parameter validation

- Reject unknown metadata keys.

- Ignore or sanitize flags like process_commands unless explicitly authorized.

Function & action authorization

- Do not execute arbitrary commands based on user input or metadata.

- Map allowed functions server-side; apply RBAC and per-call authorization checks.
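A server-side function map can be a plain dictionary from intent name to handler and permitted roles. Anything not in the map is refused, whatever the transcript or metadata asks for. The handlers, intent names and roles here are hypothetical.

```python
from typing import Callable, Dict, Set, Tuple

# Hypothetical handlers standing in for real device integrations.
def lights_on(device: str) -> str:
    return f"lights on: {device}"

def unlock_door(device: str) -> str:
    return f"unlocked: {device}"

# Server-side allowlist: intent -> (handler, roles permitted to invoke it).
ACTIONS: Dict[str, Tuple[Callable[[str], str], Set[str]]] = {
    "lights.on": (lights_on, {"member", "owner"}),
    "door.unlock": (unlock_door, {"owner"}),
}

def dispatch(intent: str, device: str, caller_roles: Set[str]) -> str:
    """Run an allowlisted action only if the caller holds a permitted role.

    caller_roles must come from the authenticated session, not from the
    transcript or request metadata.
    """
    entry = ACTIONS.get(intent)
    if entry is None:
        raise PermissionError(f"intent not allowlisted: {intent}")
    handler, allowed_roles = entry
    if not caller_roles & allowed_roles:
        raise PermissionError(f"caller lacks a role permitted for {intent}")
    return handler(device)
```

High-impact intents such as door.unlock are good candidates for an additional confirmation step on a trusted device.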

Rate limiting & anomaly detection

- Limit streaming initiation per device/account and flag long-lived or repeated stream startups.

- Detect unusual patterns (e.g., frequent return_raw_data requests).
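Per-device limiting of stream start-ups can be done with a sliding window of recent start times. In this sketch, the default of five starts per 60 seconds is an assumed value. The `now` parameter exists so the logic can be tested deterministically.

```python
import time
from collections import defaultdict, deque
from typing import Deque, Dict, Optional

class StreamStartLimiter:
    """Allow at most max_starts stream initiations per device per window."""

    def __init__(self, max_starts: int = 5, window_seconds: float = 60.0) -> None:
        self.max_starts = max_starts
        self.window = window_seconds
        self._starts: Dict[str, Deque[float]] = defaultdict(deque)

    def allow(self, device_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        recent = self._starts[device_id]
        while recent and now - recent[0] >= self.window:
            recent.popleft()  # drop starts that fell out of the window
        if len(recent) >= self.max_starts:
            return False  # deny; a good place to emit an anomaly event
        recent.append(now)
        return True
```

Denied attempts are not recorded in the window, so a device that keeps retrying is not locked out indefinitely. Repeated denials are themselves a useful signal to forward to alerting.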

Transport & storage protections

- Encrypt audio-in-transit (TLS) and at-rest.

- Limit storage retention; redact transcripts containing potential secrets before storage.

- Mask or redact PII in transcriptions automatically.
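Automatic masking can start with pattern matching on the transcript before it is stored. The sketch below redacts digit runs that pass the Luhn checksum, a standard card-number check that cuts false positives, and it also redacts email-like strings. It assumes the STT output renders spoken numbers as digits. The regular expressions are illustrative, not a complete PII detector.

```python
import re

# 13-19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")
EMAIL = re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b")

def luhn_valid(digits: str) -> bool:
    """Standard Luhn checksum over a string of decimal digits."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0

def redact_transcript(text: str) -> str:
    def card_sub(match: "re.Match[str]") -> str:
        digits = re.sub(r"\D", "", match.group())
        return "[REDACTED_CARD]" if luhn_valid(digits) else match.group()

    text = CARD_CANDIDATE.sub(card_sub, text)
    return EMAIL.sub("[REDACTED_EMAIL]", text)
```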

Input sanitization & content filtering

- Apply detection for common secret patterns (API keys, tokens) in both inputs and outputs.

- Prevent untrusted user content from being used in system prompts or model training.
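Secret-pattern detection can run on both transcripts and model outputs. A match can then block the output or keep the text out of prompts and training sets. The pattern set below is illustrative:
- the AKIA prefix used by long-term AWS access key IDs,
- the "eyJ" prefix that base64url-encoded JSON Web Token headers start with,
- a hypothetical "password is ..." phrase.

Extend it with the token formats your own platform issues.

```python
import re
from typing import List, Tuple

# Illustrative patterns only; add the token formats your platform issues.
SECRET_PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "jwt": re.compile(r"\beyJ[\w-]+\.[\w-]+\.[\w-]+"),
    "spoken_password": re.compile(r"\bpassword\s+is\s+\S+", re.IGNORECASE),
}

def find_secrets(text: str) -> List[Tuple[str, int]]:
    """Return (pattern_name, start_offset) for every secret-like match."""
    hits = []
    for name, pattern in SECRET_PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((name, match.start()))
    return hits
```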

Logging, alerting & forensics

- Log stream starts/stops, token usage, and function call attempts.

- Monitor for abnormal token reuse or cross-IP session activity.
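Once stream events are logged, cross-IP session activity can be detected offline or in a stream processor. The sketch below flags any session ID seen from two different source IPs within a time window. The event fields, action names and 300-second window are assumptions.

Mobile clients legitimately change IPs, so treat a hit as a signal to investigate rather than proof of hijacking.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import DefaultDict, Iterable, List, Set, Tuple

@dataclass(frozen=True)
class StreamEvent:
    timestamp: float
    session_id: str
    source_ip: str
    action: str  # e.g. "start", "attach", "stop" (hypothetical event names)

def cross_ip_sessions(events: Iterable[StreamEvent],
                      window_seconds: float = 300.0) -> Set[str]:
    """Return session IDs used from more than one IP within window_seconds."""
    seen: DefaultDict[str, List[Tuple[float, str]]] = defaultdict(list)
    flagged: Set[str] = set()
    for event in sorted(events, key=lambda e: e.timestamp):
        history = seen[event.session_id]
        for ts, ip in history:
            if ip != event.source_ip and event.timestamp - ts <= window_seconds:
                flagged.add(event.session_id)
                break
        history.append((event.timestamp, event.source_ip))
    return flagged
```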

User consent & privacy controls

- Explicit user consent UI for continuous recording.

- Clear indicators (lights/notifications) when streaming or recording is active.

Secure defaults & separation

- Ship with debug disabled; make enabling debug an explicit dev operation.

- Isolate developer tooling from production voice endpoints.

Responsible disclosure tips (for researchers)

If you discover an issue:

- Stop active probing when you confirm a vulnerability.

- Capture minimal evidence (sanitized screenshots, request/response headers).

- Contact the vendor via their security contact or bug bounty program.

- Provide a reproducible, lab-safe test case.

- If the vendor confirms and patches, coordinate disclosure timing.

The researcher followed these steps and achieved responsible resolution.

Final notes

Voice interfaces are a unique, high-value attack surface. They combine sensory input, model inference, and often direct control over physical devices. As voice assistants proliferate, secure-by-default design is essential: lock down streaming endpoints, remove debug paths from production, implement strict auth, and assume user input is adversarial.

Stay vigilant - voice data is private by nature; secure it accordingly.