Can AI Defend Us Against Hackers? A Pentester Reality Check

AI is transforming cybersecurity, but can it truly outsmart human hackers? A detailed, expert-level breakdown from a pentester’s perspective.

Disclaimer: This post is for educational and defensive purposes. It is written from a professional pentester’s perspective to analyze how AI is transforming both cyber offense and defense, and what this means for the future of ethical hacking.

The Hype vs. The Reality: Can AI Truly Stop Hackers?

Everywhere you look, AI is being hailed as the silver bullet of cybersecurity.

Vendors market “AI-powered” threat detection tools that claim to stop zero-days before they exist.

Media headlines scream, “AI fights hackers faster than ever before!”

But here’s the reality from someone who’s been on both sides of the terminal: as a pentester, I’ve watched AI struggle against real-world attack creativity.

AI can spot unusual patterns, correlate billions of logs, and flag anomalies faster than any human. Yet, when faced with improvisation, context, or intent, it falters.

So let’s strip away the hype and analyze what’s really happening.

Can AI defend us against hackers - or is it just another tool in a much larger chess game?

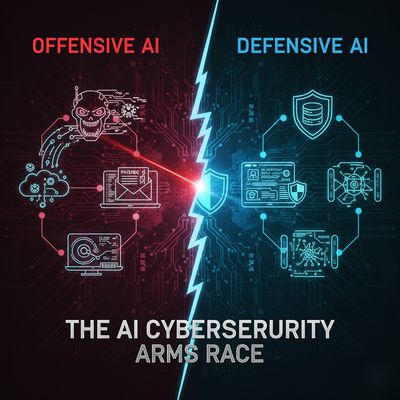

Section 1 - The Offensive AI: When Hackers Teach Machines to Hack

AI is not just a defender’s toy anymore.

Attackers have started weaponizing it - creating, automating, and evolving attacks that traditional systems simply can’t keep up with.

🧬 1. Polymorphic Malware

Malware that rewrites itself every time it runs is not new, but machine learning makes that mutation cheaper, faster, and more varied.

Traditional antivirus relies on signatures - static identifiers like file hashes or byte patterns. AI-powered malware, however, uses generative algorithms to mutate its structure, so exact-match signatures no longer fire.
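
To make the signature problem concrete, here is a minimal sketch using harmless strings in place of real payloads. The `KNOWN_BAD_HASHES` set and both sample strings are hypothetical; the point is only that an exact hash match breaks on any byte-level change.

```python
import hashlib

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a hex string."""
    return hashlib.sha256(data).hexdigest()

# Hypothetical "signature database": hashes of samples seen before.
original = b"echo harmless-demo-payload"
KNOWN_BAD_HASHES = {sha256_hex(original)}

def matches_signature(sample: bytes) -> bool:
    """Exact-match check, the way a pure hash signature works."""
    return sha256_hex(sample) in KNOWN_BAD_HASHES

# Same behavior, one extra space: the digest is completely different.
variant = b"echo  harmless-demo-payload"

print(matches_signature(original))  # True
print(matches_signature(variant))   # False
```

This is why defenders pair signatures with behavioral detection: the behavior stays the same even when the bytes do not.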

Example:

An attacker prompts or fine-tunes an LLM (Large Language Model) to re-encode payloads or rephrase PowerShell commands every few minutes.

Each version looks unique but performs the same malicious task - stealing data, establishing persistence, or exfiltrating tokens.

This is offensive AI in motion - malware that learns from the defender’s responses.

🎯 2. Automated Phishing at Scale

In the past, phishing campaigns required creativity and time.

Now, AI can craft thousands of personalized phishing emails - each tuned to the victim’s language, tone, and context.

Imagine a GPT-style model that reads a company’s public emails and LinkedIn posts, then mimics internal communication perfectly.

These aren’t “Nigerian prince” scams anymore - they’re linguistically flawless social-engineering payloads.

AI even helps automate domain generation and mimic trusted templates, drastically improving click-through rates.

For defenders, it’s a nightmare: the attacks look human because they are machine-generated to sound human.

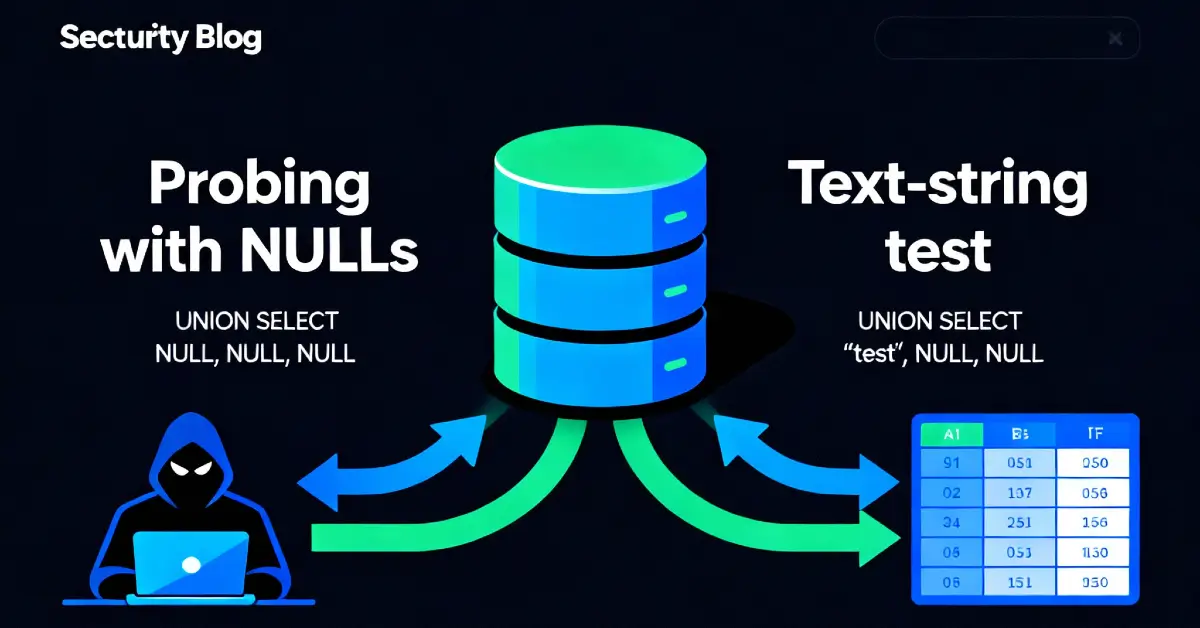

🔍 3. Vulnerability Discovery via AI Scanning

Offensive researchers now train ML models to identify vulnerable code patterns in repositories at a scale that manual review cannot match.

AI can scan thousands of GitHub repos per hour, detecting secrets, tokens, misconfigurations, or injection flaws using learned patterns.
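
The simplest layer of this kind of scanning is plain pattern matching, which is also what defenders can run against their own repositories before an attacker does. The sketch below is an assumption-laden illustration: the three rules and the sample text are hypothetical, and real scanners use far larger rule sets plus entropy checks or learned models.

```python
import re

# Illustrative rules only; names and patterns are chosen for this example.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_credential": re.compile(
        r"(?i)\b(?:api[_-]?key|secret|token)\b\s*[:=]\s*['\"][^'\"]{16,}['\"]"
    ),
}

def scan_text(text: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_name) for every rule that matches a line."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings

# Fake values that only have the right shape; they are not real credentials.
sample = '''\
DEBUG = True
aws_key = "AKIAABCDEFGHIJKLMNOP"
api_key = "0123456789abcdef0123"
'''
print(scan_text(sample))
# [(2, 'aws_access_key_id'), (3, 'hardcoded_credential')]
```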

Some advanced setups even chain multiple AI models:

- One model fetches exposed code or endpoints

- Another evaluates for vulnerability likelihood

- A third crafts proof-of-concept payloads

That’s not science fiction. It’s automated recon on steroids.

Section 2 - The Defensive AI: The Rise of the Cybersecurity “Warrior”

Now to the good news - AI is not just making attackers smarter. It’s also redefining defensive strategy.

For security teams drowning in data, AI acts like an over-caffeinated analyst who never sleeps, never gets tired, and never misses a log entry.

🧠 1. AI-Powered SIEM and SOC Automation

Traditional Security Information and Event Management (SIEM) systems generate millions of alerts daily.

AI can help reduce the noise by learning what normal looks like for each environment.

Modern SIEM tools (like Splunk AI or Microsoft Sentinel) now use behavioral baselines to identify deviations rather than relying solely on rule-based triggers.

For example:

“User A normally logs in from Mumbai between 9 AM and 6 PM. Today, the same credentials accessed a system in Berlin at 2 AM.”

That’s anomaly detection - and AI is brilliant at it.
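
A behavioral baseline can be sketched in a few lines. This is a deliberately simplified illustration, not how any particular SIEM implements it: the `LoginBaseline` class is hypothetical, and it only remembers which cities and hours it has seen for one user.

```python
from dataclasses import dataclass, field

@dataclass
class LoginBaseline:
    """Per-user record of cities and hours (0-23) seen during a learning period."""
    cities: set[str] = field(default_factory=set)
    hours: set[int] = field(default_factory=set)

    def learn(self, city: str, hour: int) -> None:
        self.cities.add(city)
        self.hours.add(hour)

    def anomalies(self, city: str, hour: int) -> list[str]:
        """Return the reasons a login deviates from the baseline (empty if none)."""
        reasons = []
        if city not in self.cities:
            reasons.append(f"unseen location: {city}")
        if hour not in self.hours:
            reasons.append(f"unusual hour: {hour:02d}:00")
        return reasons

baseline = LoginBaseline()
for hour in range(9, 18):  # 9 AM up to, but not including, 6 PM
    baseline.learn("Mumbai", hour)

print(baseline.anomalies("Mumbai", 10))  # []
print(baseline.anomalies("Berlin", 2))   # ['unseen location: Berlin', 'unusual hour: 02:00']
```

Production systems replace the hard sets with statistical models, but the idea is the same: learn what is normal per user, then report deviations.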

🛡️ 2. Threat Hunting and Incident Response

AI-driven platforms like CrowdStrike Falcon or Darktrace don’t just alert - they respond.

When an anomaly is detected, these systems can automatically:

- Quarantine suspicious endpoints

- Kill processes

- Block IP ranges

- Trigger predefined playbooks in SOAR tools

In essence, AI acts as a first responder, buying human analysts the critical minutes needed for deeper investigation.
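
The first-responder pattern above can be sketched as a lookup from alert type to an ordered list of actions. Everything here is hypothetical: the action functions only return strings, where a real deployment would call an EDR, firewall, or SOAR API, and the alert fields are invented for the example.

```python
from typing import Callable

# Hypothetical response actions; each one just describes what it would do.
def quarantine_endpoint(alert: dict) -> str:
    return f"quarantine {alert['host']}"

def kill_process(alert: dict) -> str:
    return f"kill pid {alert['pid']} on {alert['host']}"

def notify_analyst(alert: dict) -> str:
    return f"notify analyst about {alert['host']}"

# Ordered playbooks keyed by alert type (types are assumptions for this sketch).
PLAYBOOKS: dict[str, list[Callable[[dict], str]]] = {
    "ransomware_behavior": [kill_process, quarantine_endpoint, notify_analyst],
    "suspicious_login": [notify_analyst],
}

def respond(alert: dict) -> list[str]:
    """Run the playbook for the alert type; unknown types go straight to a human."""
    steps = PLAYBOOKS.get(alert["type"], [notify_analyst])
    return [step(alert) for step in steps]

alert = {"type": "ransomware_behavior", "host": "ws-042", "pid": 4312}
for action in respond(alert):
    print(action)
```

Note the fallback: anything the playbooks do not recognize is escalated rather than acted on automatically, which keeps the human in the loop for the unusual cases.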

🧩 3. Predictive Threat Intelligence

AI thrives on patterns. When trained on large threat datasets, it can predict where attacks might happen next.

For instance, AI models can correlate:

- Recent CVE exploitations

- Dark web chatter

- IOC overlaps between campaigns

This lets defenders anticipate attacks before they occur - a level of foresight that human-only analysis rarely achieves.
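
One small building block of this correlation is measuring how much two campaigns share. A minimal sketch, assuming indicators are already normalized to strings; the indicator values below are hypothetical (documentation-range IPs and example domains), and Jaccard similarity is just one reasonable choice of overlap metric.

```python
def jaccard(a: set[str], b: set[str]) -> float:
    """Jaccard similarity: size of the intersection over size of the union."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)

# Hypothetical indicator sets for two campaigns.
campaign_a = {"203.0.113.10", "198.51.100.7", "login-update.example", "a1b2c3"}
campaign_b = {"203.0.113.10", "login-update.example", "cdn-sync.example"}

shared = campaign_a & campaign_b
print(sorted(shared))                             # ['203.0.113.10', 'login-update.example']
print(round(jaccard(campaign_a, campaign_b), 2))  # 0.4
```

A high overlap suggests shared infrastructure or tooling, which is a lead for an analyst to investigate rather than proof of attribution.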

Section 3 - The Pentester’s Reality Check: Where AI Still Fails

This is where things get real.

Despite the marketing hype, AI is not (yet) the all-powerful cybersecurity guardian it’s often portrayed as.

From the perspective of a penetration tester, AI defense systems have blind spots - some of them dangerously large.

⚠️ 1. Adversarial Attacks: Fooling the AI

Attackers can poison AI models by subtly manipulating the data those models learn from.

Imagine a machine learning-based intrusion detection system that learns from historical logs.

If an attacker gradually introduces “benign-looking malicious activity” over time, the AI may start treating these actions as normal.

This is called data poisoning (also known as model poisoning), and it’s already being used in offensive research labs.
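
The slow-drift scenario can be simulated with a toy detector. This is a sketch under stated assumptions, not a model of any real IDS: the detector keeps an exponential moving average as its baseline, alerts when a value exceeds 1.5 times that baseline, and learns from everything it does not flag. All numbers are invented.

```python
def run_detector(values, baseline=100.0, alpha=0.3, tolerance=1.5):
    """Flag values above tolerance * baseline; fold unflagged values into the baseline."""
    alerts = []
    for day, value in enumerate(values, start=1):
        if value > tolerance * baseline:
            alerts.append(day)
        else:
            # The detector "learns" from anything it considered normal.
            baseline = (1 - alpha) * baseline + alpha * value
    return alerts, baseline

# A sudden jump to 5x normal volume is caught immediately.
sudden = [100, 100, 500]
# A 10% daily increase stays under the threshold while dragging the baseline up.
gradual = [100 * 1.1 ** day for day in range(1, 21)]

print(run_detector(sudden)[0])   # [3]
print(run_detector(gradual)[0])  # []
print(round(gradual[-1]))        # 673
```

The gradual series ends well above the level that triggered an alert in the sudden case, yet it never fires, because the detector’s idea of normal moved with it. Auditing what a model is allowed to learn from is the defensive counterpart.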

Similarly, adversarial examples - tiny changes in inputs - can trick AI classifiers.

For example, a modified network packet might appear harmless to the AI but still trigger malicious behavior in real execution.
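
A toy linear classifier shows how little it can take. The weights, bias, and feature values below are hypothetical, and real detectors are far more complex, but the mechanism is the same: nudge each input feature slightly in the direction that lowers the score.

```python
# Hypothetical learned weights for three numeric features of an event.
WEIGHTS = [0.8, 0.5, -0.3]
BIAS = -0.6

def score(features):
    """Linear score; positive means the classifier calls the event malicious."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS

def is_malicious(features):
    return score(features) > 0

def perturb(features, epsilon):
    """Shift each feature by epsilon against the sign of its weight."""
    return [x - epsilon * (1 if w > 0 else -1) for w, x in zip(WEIGHTS, features)]

sample = [0.6, 0.5, 0.2]
evasive = perturb(sample, epsilon=0.05)

print(is_malicious(sample))   # True
print(is_malicious(evasive))  # False
```

Each feature moved by only 0.05, yet the verdict flipped. Whether the perturbed input still behaves maliciously depends on the attack, which is exactly the part a human adversary works out.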

🧩 2. The “Unknown Unknowns” - Zero-Days

AI is great at recognizing patterns, but what happens when there’s no pattern to recognize?

Zero-days, by definition, are new and unseen. They exploit gaps that AI has never encountered in its training data.

So while AI might detect anomalies after the fact, it can’t predict novel exploit logic without human creativity and contextual reasoning.

🧮 3. Overfitting and False Positives

AI models can overfit - meaning they perform extremely well on known data but fail in the wild.

For SOC teams, that often means a flood of false positives, overwhelming analysts and eroding trust in AI recommendations.
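
Overfitting is one cause, but simple base-rate arithmetic explains much of the flood even for a well-behaved model. The worked example below uses invented numbers: 100 real attack events hidden among 999,900 benign ones, a 99% detection rate, and a 1% false-positive rate.

```python
def alert_precision(attacks, benign, detection_rate, false_positive_rate):
    """Return (true_alerts, false_alerts, precision) for the given volumes and rates."""
    true_alerts = attacks * detection_rate
    false_alerts = benign * false_positive_rate
    precision = true_alerts / (true_alerts + false_alerts)
    return true_alerts, false_alerts, precision

true_alerts, false_alerts, precision = alert_precision(
    attacks=100, benign=999_900, detection_rate=0.99, false_positive_rate=0.01
)
print(round(true_alerts), round(false_alerts), round(precision, 4))
# 99 9999 0.0098
```

Under these assumptions, fewer than 1 in 100 alerts is a real attack, even though the model sounds “99% accurate” on paper. That gap is what analysts experience as alert fatigue.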

In some organizations, teams end up disabling AI-based detections because they cause alert fatigue.

That defeats the whole purpose.

🔄 4. Lack of Contextual Understanding

Here’s something every pentester knows: context matters.

AI can tell you that something happened, but not why it matters.

Example:

AI sees a file upload to /uploads/debug.log and scores it as routine.

A human who opens that log file notices that it contains JWT tokens.

No AI (today) truly understands intent - the difference between a normal operation and a strategic move in a kill chain.

That’s where human intuition still reigns supreme.
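
Once a human has spotted a pattern like this, it can be handed back to automation as a cheap content check. A minimal sketch, assuming tokens follow the common three-part JWT shape with base64url-encoded JSON in the first two segments; the token and log line are generated in the example and are not real credentials.

```python
import base64
import json
import re

# JSON objects start with '{"', which base64url-encodes to the prefix "eyJ".
JWT_PATTERN = re.compile(r"\beyJ[A-Za-z0-9_-]+\.eyJ[A-Za-z0-9_-]+\.[A-Za-z0-9_-]+")

def b64url(obj: dict) -> str:
    """Base64url-encode a JSON object without padding, as JWT segments are."""
    raw = json.dumps(obj).encode()
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode()

def find_jwt_like(text: str) -> list[str]:
    """Return every substring that has the shape of a JWT."""
    return JWT_PATTERN.findall(text)

# Build a fake token so the example does not embed anything resembling a secret.
fake_token = f"{b64url({'alg': 'HS256'})}.{b64url({'sub': 'demo'})}.not-a-real-signature"
log = f"DEBUG auth header: Bearer {fake_token}\nINFO request completed"

hits = find_jwt_like(log)
print(len(hits))              # 1
print(hits[0] == fake_token)  # True
```

The check itself is trivial. Knowing that a debug log in an upload directory is worth checking is the part that came from a person.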

Section 4 - The Human-AI Alliance: A Practical Blueprint for Teams

The future isn’t “AI vs. humans.” It’s AI with humans.

AI handles the volume, while humans handle the value - the context, judgment, and creative response.

When integrated correctly, the two form a resilient defense strategy that learns, adapts, and improves together.

| Task Type | AI Strength | Human Strength | Ideal Workflow |
|---|---|---|---|
| Log Analysis & Event Correlation | Speed and pattern recognition | Prioritization and validation | AI triages → Human validates |
| Threat Detection | Behavioral baselining | Contextual reasoning | AI flags → Human interprets |
| Incident Response | Automation & speed | Strategic containment | AI executes playbooks → Human supervises |
| Threat Intelligence | Correlation across datasets | Understanding geopolitical & motive context | AI aggregates → Human analyzes |
| Pentesting | Pattern discovery | Creative exploitation | AI assists → Human leads |

This hybrid model is already being adopted by modern SOCs.

The key is not to fear automation - but to guide it responsibly.

Section 5 - A Pentester’s Vision: AI as the Next Layer of Security Maturity

As penetration testers, we constantly evolve to stay ahead of defensive technologies.

AI will not eliminate human hackers; it will reshape how we hunt, defend, and learn.

1. AI-Assisted Pentesting

Tools like Burp Suite extensions, Recon-ng with ML models, or AI-assisted fuzzers already help testers discover patterns and weak points faster.

Soon, pentesters may use custom GPT agents trained on bug bounty archives to predict which endpoints are most likely to be vulnerable.

2. AI-Augmented Red Teaming

Imagine AI generating adaptive phishing templates that evolve in real time, based on target behavior.

That’s not far-fetched - it’s already being tested in research environments.

3. AI-Aware Blue Teaming

Defensive teams must learn how to validate AI outputs, audit ML pipelines for poisoning, and understand how adversaries manipulate detection logic.

In short:

The next generation of ethical hackers must understand both machine learning and human manipulation.

That’s the new battlefield.

Section 6 - The Verdict: An Ally, Not a Replacement

AI isn’t a magical cybersecurity warrior waiting to save the world.

It’s an evolving apprentice - powerful, tireless, but still learning.

From a pentester’s lens, AI is a formidable ally that:

- Automates low-level analysis

- Detects patterns humans would miss

- Responds faster than any analyst could

But it also:

- Misses creative, multi-step exploits

- Can be fooled, poisoned, or overloaded

- Lacks ethical judgment and human intuition

The strongest cybersecurity defense in 2025 and beyond isn’t AI alone. It’s humans and AI fighting side by side, each amplifying the other’s strengths.

Final Thoughts

As offensive security professionals, we should embrace AI not as competition but as collaboration.

Learn to test AI systems. Learn to exploit them safely.

And most importantly - learn to build trust in AI-driven defenses.

Because the hackers of the future won’t just exploit systems.

They’ll exploit models.

And the defenders who understand both code and cognition - those will be the real warriors of tomorrow.

Published on herish.me - where cybersecurity meets innovation and reality.