$4500 Local File Inclusion - single-parameter catastrophe exposing cloud infrastructure

A deep, defensive walkthrough of an LFI that allowed arbitrary local file reads via a misused download_url parameter.

Disclaimer

This write-up is strictly educational and defensive. All testing described was performed by the researcher on targets they were authorized to test. Do not attempt these techniques against systems you do not own or explicitly have permission to test. The goal of this article is to help developers and defenders recognize dangerous patterns, reproduce fixes in safe environments, and harden systems against similar mistakes.

Introduction

Small, seemingly benign features often cause the largest security problems. Download helpers, attachment proxies, and "fetch-and-stream" utilities are convenient developer primitives - but when they accept user-controlled input without strict validation, they can expose entire server file systems, secrets, and cloud credentials.

This post reconstructs a real, high-impact Local File Inclusion (LFI) finding that yielded a $4500 bounty. The vulnerable endpoint accepted a download_url parameter and the server fetched the target resource on behalf of the user. Because the server did not validate the URL scheme, path, or token binding, an authenticated researcher was able to retrieve arbitrary files (e.g., /etc/passwd, application config files, cloud credential blobs). The issue is a textbook example of "trust the helper, trust the user" gone wrong.

This walkthrough covers: the exact observation steps, how the researcher validated and escalated the finding safely, why the vulnerability occurred, remediation recommendations, and a defensive checklist to search for the same pattern in your estate.

Understanding the target

The target was a cloud-hosted service platform with a support-ticket system that allows users to attach and download files. Architecturally, the app used a server-side helper to fetch attachments and stream them to authenticated requesters. Key observations:

- Download requests used an action parameter set to download_file and included a token and a download_url.

- Authentication was session/cookie or token based; only authenticated users could call this endpoint.

- The server fetched the resource server-side and returned the raw bytes to the requester (not a client-side redirect).

- No immediate scheme sanitization or token-to-file mapping was obvious from the client UI.

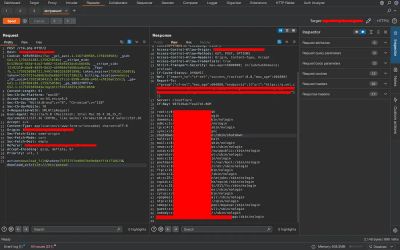

The researcher used an interception proxy (Burp Suite) and crafted safe, read-only requests. They avoided mass harvesting and destructive actions, and requested only the files needed to demonstrate the vulnerability. All repro steps below are redacted for safety but preserve the technical detail defenders need.

Step 1 - Recon: mapping the download flow

The first task when you see download helpers is to determine where the content actually comes from.

Typical reconnaissance steps the researcher ran:

- Intercept a normal download request when clicking an attachment.

- Observe the POST/GET parameters and headers.

- Confirm the server is the one fetching the resource (look for proxy headers, absence of client redirects).

- Test trivial manipulations to infer validation logic.

A representative intercepted request carried three parameters: action=download_file, token, and download_url.

Key signals:

- The download_url parameter was fully user-controlled.

- The flow used server-side fetching - the server performed the HTTP/S request to download_url and streamed content back.

- No client-side file mapping (e.g., token referenced to a server path) was visible in the UI.

With that mapped, the next step is to minimally probe for server-side acceptance of alternate schemes.

Step 2 - Low-cost validation: is the scheme checked?

A safe early test is to supply a scheme that legitimate downloads would never use and watch the response. The researcher tried file:// - a canonical way to test for local file acceptance.

In the test (run only on authorized targets), download_url was pointed at a file:// URI for /etc/passwd.

The server returned the contents of /etc/passwd (truncated in the logs for safety). The response pattern - HTTP 200 with raw file content - confirms server-side LFI via the fetch helper. That single observation converts a theory into a confirmed high-severity issue.

Important ethical note: the researcher limited reads to clearly public or non-sensitive system files, enough to prove the pattern before reporting. After confirming the /etc/passwd read and the server's behavior, they reported the issue responsibly to the vendor.

Step 3 - Safe exploitation paths and escalation (defensive-minded)

After validating that the server accepts file:// URIs, a responsible researcher should follow a minimal, principle-of-least-privilege path to demonstrate impact while minimizing risk.

Typical escalation steps (used defensively by the researcher):

- Read non-sensitive files to confirm patterns (e.g., /etc/passwd).

- Attempt to access application config placeholders like /.htaccess or public logs, not secret keys.

- If the vendor requests reproduction details, provide the exact request and headers with secrets redacted.

- Only after vendor acceptance, attempt targeted reads of environment/config files to demonstrate impact for remediation.

The proof pattern stays redacted and sanitized: if the vendor wants confirmation, the researcher shares metadata only (HTTP status, the first lines of the file with sensitive values redacted, and reproduction steps) without publishing any secret contents publicly.

Step 4 - Why the helper pattern is dangerous

This is a frequently-seen structural problem:

Generic fetch helper anti-pattern

Developers often write a generic fetch utility and then call it across the codebase, assuming callers only pass safe URIs. When that helper accepts any scheme (including file://, ftp://, gopher://, php://filter, etc.), it opens multiple attack classes:

- LFI (file://)

- SSRF (http/s to internal services)

- protocol abuse (gopher, file descriptors)

- PHP filters (php://filter for source disclosure)
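The anti-pattern can be made concrete with a minimal Python sketch. This is not the vendor's code (the original stack is not stated here), and `fetch_and_stream` and `demo_local_read` are hypothetical names. It relies on the fact that Python's `urllib.request.urlopen` handles `file:` URLs by default, so a helper that forwards caller input unchanged will also read local files. The demonstration only touches a throwaway temp file.

```python
import tempfile
from pathlib import Path
from urllib.request import urlopen


def fetch_and_stream(download_url: str) -> bytes:
    """Hypothetical naive helper: fetches whatever URL the caller supplies.

    ANTI-PATTERN, shown only to make the flaw concrete. No scheme, host,
    or path validation happens before the fetch.
    """
    with urlopen(download_url) as response:  # urlopen also accepts file:// URLs
        return response.read()


def demo_local_read() -> bytes:
    """Lab-only demonstration against a throwaway temp file, not a real secret."""
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "lab-secret.txt"
        target.write_bytes(b"lab-only-value\n")
        # Path.as_uri() turns the absolute path into a file:// URL.
        return fetch_and_stream(target.as_uri())
```

Calling `demo_local_read()` in a scratch environment returns the temp file's bytes, which is the same behavior the researcher observed through download_url, just reproduced safely on a file the test created itself.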

Missing token-to-resource binding

The platform validated the token for authentication but did not tie the token to an allowed resource path. A robust design binds token → allowed path (or a short-lived pre-signed URL), preventing arbitrary URL substitution.
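One way to picture token-to-resource binding is a server-side store in which each token maps to exactly one attachment for exactly one user, so the client never supplies a URL or path at all. The sketch below is an assumption-laden illustration: `DownloadTokens`, `Grant`, and the in-memory dictionary are hypothetical, and a real system would persist and expire entries.

```python
import secrets
from dataclasses import dataclass
from pathlib import Path


@dataclass(frozen=True)
class Grant:
    user_id: str
    attachment_path: Path  # chosen by the server, never by the client


class DownloadTokens:
    """Hypothetical in-memory token store (no persistence or expiry)."""

    def __init__(self) -> None:
        self._grants: dict[str, Grant] = {}

    def issue(self, user_id: str, attachment_path: Path) -> str:
        """Create an unguessable token bound to one user and one file."""
        token = secrets.token_urlsafe(32)
        self._grants[token] = Grant(user_id, attachment_path)
        return token

    def resolve(self, token: str, user_id: str) -> Path:
        """Return the bound path, or refuse if the token or user does not match."""
        grant = self._grants.get(token)
        if grant is None or grant.user_id != user_id:
            raise PermissionError("token is not valid for this user")
        return grant.attachment_path
```

With this shape, the download handler would call `resolve(token, current_user)` and serve only the returned path; there is no download_url parameter left for an attacker to substitute.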

Lack of allowlist & canonicalization

There was no allowlist (e.g., https://cdn.example.com/attachments/) and no canonical path enforcement (e.g., serve only files under /data/attachments/). A helper that must accept caller-supplied locations needs both guards, with input normalized before the checks run.
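A minimal sketch of both guards, assuming the example host and directory mentioned above; `is_allowed_url`, `resolve_inside_root`, and the constants are hypothetical names, and `Path.is_relative_to` requires Python 3.9 or later. It is a starting point under those assumptions rather than a complete validator.

```python
from pathlib import Path
from urllib.parse import unquote, urlsplit

ALLOWED_HOSTS = {"cdn.example.com"}          # assumption: example host from the text
ALLOWED_URL_PREFIX = "/attachments/"         # assumption
ATTACHMENT_ROOT = Path("/data/attachments")  # assumption


def is_allowed_url(url: str) -> bool:
    """Return True only for https URLs on an allowlisted host and path prefix."""
    try:
        parts = urlsplit(url)
        port = parts.port  # raises ValueError on a malformed port
    except ValueError:
        return False
    if parts.scheme != "https" or parts.hostname not in ALLOWED_HOSTS:
        return False
    if parts.username is not None or port not in (None, 443):
        return False
    # Decode percent-escapes first so "%2e%2e" cannot hide a ".." segment.
    path = unquote(parts.path)
    if not path.startswith(ALLOWED_URL_PREFIX):
        return False
    return ".." not in path.split("/")


def resolve_inside_root(relative_name: str, root: Path = ATTACHMENT_ROOT) -> Path:
    """Canonicalize a requested name and fail closed if it leaves the root."""
    if "\x00" in relative_name:
        raise ValueError("null byte in path")
    root_resolved = root.resolve()
    candidate = (root_resolved / relative_name).resolve()  # follows symlinks and ".."
    if not candidate.is_relative_to(root_resolved):
        raise ValueError("path escapes the attachment root")
    return candidate
```

The URL check parses before comparing, which avoids substring mistakes such as treating `https://cdn.example.com@evil.example/` as trusted. The path check compares resolved paths, so `../` sequences, absolute paths, and symlinks pointing outside the root are all rejected.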

Step 5 - Real-world impact scenarios

An LFI of this type can enable catastrophic outcomes, depending on server layout:

- Cloud credential exposure: reading cloud provider credentials (e.g., ~/.aws/credentials, Azure MSI tokens, GCP service account files) can provide immediate lateral movement into cloud infrastructure.

- Source code leakage: reading app/config, .env, or private repo tokens leads to secret exposure.

- Full compromise: combining leaked credentials with accessible management endpoints may escalate to full account or infrastructure takeover.

- Compliance breach: exposure of logs or user data can trigger legal and regulatory concerns (GDPR, HIPAA).

- Supply-chain risks: access to build artifacts or signing keys could enable artifact tampering.

The severity depends on what files are present and whether read data includes secrets - but the capability to read arbitrary files alone is critical.

Defensive fix checklist - how to remediate

If you maintain a server that implements fetch-and-stream helpers, apply the following prioritized mitigations:

- Scheme allowlist: accept only https:// and restrict domains to trusted CDNs or internal asset hosts. Reject file://, gopher://, ftp://, and any protocol not explicitly required.

- Token-to-path mapping: do not accept a user-supplied URL. Use a server-side mapping (attachment_id → canonical_path), or generate short-lived pre-signed URLs server-side.

- Canonicalization & sandboxing: if you must accept paths, canonicalize and ensure the resulting path is inside an allowed directory. Fail closed if canonicalization fails.

- Principle of least privilege: ensure fetchers run with a restricted OS user and minimal file privileges. Even if LFI occurs, minimize exposed data.

- Content-type and size restrictions: validate expected content types and impose strict size limits on streamed downloads.

- Audit & logging: log unusual fetch targets and alert on attempts using dangerous schemes (file://, etc.). Use WAF rules to detect and block such attempts.

- Code reviews and tests: add unit tests asserting the fetch helper rejects disallowed schemes and that token-to-path mapping is enforced.

- Defense in depth: combine the above with runtime protections (SELinux, container restrictions), VPC firewall rules, and metadata service access controls.
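The content-type and size restrictions from the checklist can be sketched as two small helpers. The names, the 10 MiB cap, and the set of allowed media types are assumptions chosen for illustration; a real service would derive them from what its attachments actually need.

```python
from typing import BinaryIO

MAX_BYTES = 10 * 1024 * 1024  # assumption: 10 MiB cap
ALLOWED_TYPES = {"image/png", "image/jpeg", "application/pdf"}  # assumption


def is_allowed_content_type(header_value: str) -> bool:
    """Compare only the media type, ignoring parameters such as charset."""
    media_type = header_value.split(";", 1)[0].strip().lower()
    return media_type in ALLOWED_TYPES


def read_capped(stream: BinaryIO, max_bytes: int = MAX_BYTES,
                chunk_size: int = 64 * 1024) -> bytes:
    """Read a stream in chunks and abort as soon as the cap is exceeded."""
    chunks = []
    total = 0
    while True:
        chunk = stream.read(chunk_size)
        if not chunk:
            break
        total += len(chunk)
        if total > max_bytes:
            raise ValueError("download exceeds the size limit")
        chunks.append(chunk)
    return b"".join(chunks)
```

Counting bytes while reading, rather than trusting a declared Content-Length, keeps the limit effective even when the upstream response omits or misstates its length.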

Troubleshooting & common pitfalls

When applying fixes, teams commonly trip on these issues:

- Broken existing integrations: adding strict allowlists may break legitimate third-party integrations - plan a staged rollout and communicate clearly.

- Presigned URL misuse: if presigned URLs are too long-lived or unscoped, they can be leaked. Use tight TTLs and scope to specific resources.

- Path canonicalization errors: naive canonicalization can be bypassed with Unicode, null bytes, or encoding trickery. Use well-tested libraries for normalization.

- Assuming HTTPS equals safe: an attacker can still host malicious content on HTTPS. Domain allowlist is required in addition to scheme checks.

- Logging secrets: avoid logging full tokens or secret contents when reproducing the issue or testing fixes - redact before storing.
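The presigned URL pitfall above comes down to two properties: a tight TTL and a signature scoped to one resource. A minimal sketch using an HMAC over the attachment id and expiry time follows; `sign_download`, `verify_download`, and the message layout are hypothetical, and cloud providers' own presigning schemes differ in detail.

```python
import hashlib
import hmac
import time
from typing import Optional


def sign_download(secret: bytes, attachment_id: str, expires_at: int) -> str:
    """Sign one attachment id together with its expiry (Unix seconds)."""
    message = f"{attachment_id}\n{expires_at}".encode()
    return hmac.new(secret, message, hashlib.sha256).hexdigest()


def verify_download(secret: bytes, attachment_id: str, expires_at: int,
                    signature: str, now: Optional[int] = None) -> bool:
    """Accept only an unexpired link whose signature matches this exact resource."""
    current = int(time.time()) if now is None else now
    if current > expires_at:
        return False
    expected = sign_download(secret, attachment_id, expires_at)
    # Constant-time comparison on bytes avoids leaking how many characters match.
    return hmac.compare_digest(expected.encode(), signature.encode())
```

Because the attachment id and expiry are both inside the signed message, a leaked link cannot be reused for a different file or extended past its TTL without invalidating the signature.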

Final thoughts

The download_url helper was an innocuous convenience: a developer wanted the server to abstract fetching for clients. But convenience without constraint created a pathway for an attacker to read the server's filesystem. The lesson is simple but unforgiving:

Never assume a helper is used only by trusted callers. Validate its inputs as if they were from the public internet.

The vendor in this case implemented fixes and rewarded the researcher - the best outcome for everyone. That result underscores the value of responsible disclosure and defensive transparency.
