Finding Hope (and $250) in a Forgotten Field: A Beginner's Guide to Stored XSS Success

A real-world story of turning frustration into a $250 stored XSS win — and how beginners can replicate the mindset, methodology, and testing approach ethically.

Disclaimer: This story is for educational and defensive purposes only. The payloads and techniques discussed are shared to help developers secure their applications and to guide new ethical hackers toward responsible bug hunting. Do not test or exploit live sites without authorization. Always follow platform rules and coordinated disclosure policies.

Introduction: When Hope Feels Lost in the Bug Bounty World

Every bug hunter hits that wall - months of “duplicate” or “not applicable” replies and zero payouts.

That’s exactly where our story begins.

After several fruitless hunts across dozens of web apps, this researcher nearly quit. No fancy tools, no secret invites - just a deep sense of frustration.

But one night, a simple decision to try one more random site led to a $250 bounty and a powerful lesson about perseverance, intuition, and the art of spotting overlooked vulnerabilities.

This post breaks down that journey - not as a “look what I did” brag, but as a technical and motivational roadmap for new hunters to replicate the mindset, process, and ethics behind turning rejection into success.

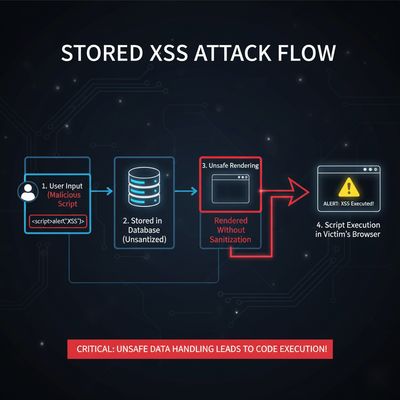

What Makes Stored XSS So Powerful (and So Common)

Before diving into the finding, it’s important to understand what makes Stored Cross-Site Scripting (XSS) special - and dangerous.

XSS allows attackers to inject malicious scripts into web pages viewed by other users. It comes in three main types:

| Type | Description | Example Trigger |
|---|---|---|
| Reflected XSS | Payload is reflected in the immediate response. | Malicious link → triggers alert instantly. |
| DOM-based XSS | Triggered via client-side JavaScript logic errors. | Changing location.hash executes injected code. |
| Stored XSS | Payload is saved on the server and executed whenever viewed. | Message boards, profile bios, or invoice templates. |

Stored XSS is often the most impactful because:

- The payload persists across sessions.

- Victims don’t need to click a link - just view a page.

- The impact scales: one stored payload can hit hundreds of users.

- It can lead to account takeover, data theft, or privilege escalation.

When a field accepts unfiltered HTML or JavaScript and renders it later in another user’s browser, that’s the XSS jackpot.

Step 1 - Exploring the Target Like a Detective, Not a Hacker

Instead of blasting automated scanners, the researcher began manually exploring. The target site looked normal: a simple business platform for creating customers and invoices.

That’s where curiosity beat automation. The mindset wasn’t “I’ll find XSS here” but “I’ll understand what the app does and how data flows.”

Key discovery:

A section called Settings → Custom invoice reference number format.

It displayed a tip:

“An {{increment}} will be replaced by an incrementing integer.”

That tiny hint indicated template-like behavior, which is often a red flag for injection possibilities (SSTI or XSS).
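To see why a placeholder hint like this matters, here is a minimal sketch of how such a format field might be expanded. The function name `render_reference` and the plain string-replacement approach are assumptions for illustration; the target's real implementation is not known.

```python
def render_reference(fmt: str, counter: int) -> str:
    """Hypothetical expansion of an invoice reference format.

    Only the {{increment}} placeholder is substituted. Every other
    character of the user-supplied format passes through unchanged.
    """
    return fmt.replace("{{increment}}", str(counter))


# A normal format produces a normal reference number.
print(render_reference("INV-{{increment}}", 42))

# Anything else in the format string survives untouched, markup included.
print(render_reference("<b>INV</b>-{{increment}}", 42))
```

If a field behaves like this, whatever surrounds the placeholder is attacker-controlled text that later lands in a page, which is why it deserves a closer look.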

Step 2 - First Tests: Playing with the Field Safely

To confirm behavior, the researcher entered a small test value that contained no placeholder and received an error message:

“Custom invoice numbers must include {{letter}}, {{number}}, or {{increment}}.”

So they tried again, this time including one of the required placeholders.

This time - it worked! The input was saved and reflected. That was enough to start controlled injection testing.
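The error message suggests the server only checks that a placeholder is present. The sketch below is a guess at what such a check could look like (the names `REQUIRED_PLACEHOLDERS` and `passes_presence_check` are hypothetical), and it shows why that kind of validation says nothing about the rest of the input.

```python
# Placeholders named in the application's error message.
REQUIRED_PLACEHOLDERS = ("{{letter}}", "{{number}}", "{{increment}}")


def passes_presence_check(fmt: str) -> bool:
    """Hypothetical validation: accept the format if ANY placeholder appears."""
    return any(placeholder in fmt for placeholder in REQUIRED_PLACEHOLDERS)


print(passes_presence_check("INV-2024"))                  # rejected: no placeholder
print(passes_presence_check("INV-{{increment}}"))         # accepted
print(passes_presence_check("<b>hi</b>-{{increment}}"))   # also accepted
```

A presence-only rule enforces what must be in the value, not what must be kept out of it, so extra markup rides along with a valid placeholder.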

Step 3 - From Curiosity to Confirmation

They began by testing for Server-Side Template Injection (SSTI), using harmless arithmetic expressions.

The output showed no evaluated result, which suggested the field was not vulnerable to SSTI.
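One way to read the result of an arithmetic probe is sketched below. The probe expression `7*7` and its result `49` are assumptions chosen for illustration (the exact values used in this hunt are not given), and `ssti_probe_evaluated` is a hypothetical helper.

```python
def ssti_probe_evaluated(rendered: str, expression: str = "7*7", result: str = "49") -> bool:
    """Return True if the output suggests the expression was computed.

    Heuristic only: the computed result must appear and the literal
    expression must be gone. A result that shows up by coincidence
    (for example inside an unrelated number) would be a false positive.
    """
    return result in rendered and expression not in rendered


# The probe came back as literal text: no server-side evaluation.
print(ssti_probe_evaluated("INV-{{7*7}}-1"))

# The probe was replaced by its value: a template engine evaluated it.
print(ssti_probe_evaluated("INV-49-1"))
```

When the literal expression is echoed back, the field is doing plain substitution, and it makes sense to move on to HTML injection tests.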

Next, they tested for HTML injection with a simple formatting tag.

The preview displayed formatted text.

That’s the “Aha!” moment. HTML tags were rendered directly - no sanitization.

This is a classic XSS precursor.

The final test was a payload that calls alert(1).

Result? 💥

A popup - confirming Stored XSS.

Step 4 - Proving Real-World Impact (Safely)

Reporting just an alert(1) usually yields a “medium severity” tag. To raise the impact rating ethically, they demonstrated session hijacking without harming anyone.

A proof-of-concept payload can demonstrate the potential risk.

When rendered in another user’s browser, such a payload would send their session cookie to a controlled endpoint.

(Always use a controlled, private testing domain like interact.sh or webhook.site for demonstration purposes - never exploit production users.)

By showing that the stored payload could leak session cookies, the researcher proved a realistic account-takeover vector.
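On the defensive side, cookie theft through script is only possible when the session cookie is readable from JavaScript. The sketch below uses Python's standard `http.cookies` module to build a session cookie with the HttpOnly, Secure, and SameSite attributes; the cookie name `session` and the helper `build_session_cookie` are assumptions for illustration. This limits the cookie-theft scenario but does not fix the XSS itself.

```python
from http.cookies import SimpleCookie


def build_session_cookie(session_id: str) -> str:
    """Return a Set-Cookie header value for a hardened session cookie."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["httponly"] = True   # not readable via document.cookie
    cookie["session"]["secure"] = True     # only sent over HTTPS
    cookie["session"]["samesite"] = "Lax"  # limits cross-site sending
    cookie["session"]["path"] = "/"
    return cookie["session"].OutputString()


print("Set-Cookie:", build_session_cookie("abc123"))
```

Injected script can still act as the logged-in user in the page, so output encoding remains the actual fix.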

Step 5 - Reporting and the Long Wait

The report included:

- Steps to reproduce (clean, clear, and simple).

- Screenshots of the input field and stored alert.

- PoC showing cookie extraction.

- Explanation of potential impact (session hijack).

- Defensive recommendations: proper sanitization, CSP, and output escaping.

Then came the hard part - waiting.

No reply for weeks. No triage confirmation. Just silence.

Until one morning: PayPal email received - $250 reward.

The company silently patched the issue and thanked them for the disclosure.

Step 6 - The Technical Root Cause

Under the hood, the bug happened because:

- User input wasn’t sanitized before being stored in the database.

- When rendering templates, the backend didn’t escape special HTML characters.

- The UI used user-controlled content directly in a dynamic field.

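The vulnerable pattern is to place the stored value directly into the page's HTML without encoding it.

The correct approach is to HTML-encode the value at output time, so that characters such as < and > are displayed as text instead of being parsed as markup.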
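The application's actual code is not available, so the following is only a minimal sketch of the two patterns, written in Python with the standard `html` module. The function names and the `<td>` wrapper are assumptions for illustration.

```python
import html


def render_preview_unsafe(reference: str) -> str:
    """Vulnerable pattern: the stored value is concatenated into HTML as-is."""
    return '<td class="invoice-ref">' + reference + "</td>"


def render_preview_safe(reference: str) -> str:
    """Safer pattern: HTML-encode the stored value at output time."""
    return '<td class="invoice-ref">' + html.escape(reference, quote=True) + "</td>"


stored_value = "<img src=x onerror=alert(1)>-{{increment}}"

# The browser would parse this as a real <img> element and run the handler.
print(render_preview_unsafe(stored_value))

# The browser would display this as harmless text.
print(render_preview_safe(stored_value))
```

Most template engines apply this encoding automatically, so the safest route is usually to leave autoescaping on and avoid bypassing it for user-controlled values.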

Adding a Content Security Policy (CSP) could further mitigate risk by blocking inline script execution.

Step 7 - How Developers Could Have Prevented This

XSS prevention boils down to one golden rule: Never trust user input.

Defensive checklist:

| Layer | Defensive Control |
|---|---|
| Input | Validate allowed characters or templates ({{}} placeholders only). |
| Storage | Store raw values, not rendered HTML. |
| Output | Escape HTML entities before rendering. |
| Framework | Use built-in encoding (e.g., Django’s {{ variable }} autoescape). |
| Policy | Add CSP: script-src 'self'; to block injected scripts. |
| Testing | Include Stored XSS test cases in QA automation. |
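The Input row can be sketched as an allowlist check. The permitted characters, the 64-character limit, and the name `is_valid_reference_format` are assumptions for illustration; a real application would pick its own rules. Validation like this complements output encoding and does not replace it.

```python
import re

# Allow only letters, digits, a few separators, and the three known placeholders.
ALLOWED_FORMAT = re.compile(r"(?:[A-Za-z0-9_\-/]|\{\{(?:letter|number|increment)\}\})+")
REQUIRED_PLACEHOLDER = re.compile(r"\{\{(?:letter|number|increment)\}\}")


def is_valid_reference_format(fmt: str) -> bool:
    """Accept a format only if every character is allowlisted
    and at least one known placeholder is present."""
    if len(fmt) > 64:
        return False
    if not ALLOWED_FORMAT.fullmatch(fmt):
        return False
    return REQUIRED_PLACEHOLDER.search(fmt) is not None


print(is_valid_reference_format("INV-{{increment}}"))         # allowed
print(is_valid_reference_format("<b>x</b>-{{increment}}"))    # rejected: markup
print(is_valid_reference_format("{{7*7}}"))                   # rejected: unknown placeholder
```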

Step 8 - Lessons for Bug Hunters

- Rejection ≠ Failure. Every “duplicate” or “informative” teaches pattern recognition. The next time you see a similar pattern, you’ll move faster.

- Think Like a Developer. Don’t attack blindly. Understand logic, templating, and what developers intend to do - then test where assumptions break.

- Persistence Pays. The researcher almost gave up. That random 2 AM decision to test a new site was the turning point.

- Prove Impact Responsibly. XSS isn’t about making an alert pop up - it’s about demonstrating real risk safely.

- Document Everything. Screenshots, reproduction steps, and suggested fixes show professionalism - and increase your reward odds.

Step 9 - The Human Side: From Burnout to Breakthrough

It’s easy to lose motivation in bug bounty. Most hunters see flashy posts - $10,000 RCE chains, exotic SSRF bypasses - and feel discouraged.

But remember: every expert was once frustrated.

Persistence and curiosity are more important than any tool.

The researcher didn’t find this bug with a fancy scanner or plugin. They found it with observation, patience, and basic browser testing. That’s real skill.

Step 10 - Practical Guide: How You Can Find Similar Bugs

Here’s a step-by-step plan for beginners who want to replicate this success (ethically):

Step 1: Pick a realistic target

Choose a small web app that is in scope for a bug bounty program, or a practice lab (like PortSwigger’s XSS labs or OWASP Juice Shop).

Step 2: Explore deeply

Open every editable field - bios, templates, settings, message forms, invoice builders.

Step 3: Start with harmless probes

Inject small HTML elements like <b> or <i> first.

If they render, you may have unsanitized input.

Step 4: Gradually escalate

Test <img src=x onerror=alert(1)>, <svg onload=alert(1)>, or encoded payloads.

Step 5: Check storage

Reload the page or log in as another user to see if the payload persists.

If it does - it’s stored.

Step 6: Prove safely

Show that your code executes - without exfiltrating data. Demonstrate risk conceptually.

Step 7: Report with professionalism

Follow the program’s disclosure policy, provide clean steps, and explain impact clearly.
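The "harmless probe" and "check storage" steps above both come down to one question: did the probe come back raw or encoded? Here is a small sketch of that check, assuming you already have the page's HTML as a string from an authorized test; `classify_reflection` is a hypothetical helper.

```python
import html


def classify_reflection(body: str, probe: str) -> str:
    """Classify how a probe string appears in a page body.

    Returns "raw" if the probe is present unencoded, "escaped" if only
    its HTML-encoded form is present, and "absent" otherwise. The probe
    should contain < or > so the two forms differ.
    """
    if probe in body:
        return "raw"
    if html.escape(probe) in body:
        return "escaped"
    return "absent"


probe = "<b>probe123</b>"
print(classify_reflection("<td><b>probe123</b></td>", probe))              # raw
print(classify_reflection("<td>&lt;b&gt;probe123&lt;/b&gt;</td>", probe))  # escaped
print(classify_reflection("<td>INV-1</td>", probe))                        # absent
```

A "raw" result on a page loaded later, or by a different account, is the signal that the value is stored and rendered without encoding.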

Step 11 - Example Professional Report Template

Title: Stored Cross-Site Scripting in Custom Invoice Reference Field

Summary:

The application fails to sanitize user input in the “Custom invoice reference format” field, allowing stored JavaScript execution for other users.

Steps to reproduce:

- Go to Settings → Invoice Reference Format.

- Enter the payload.

- Save changes.

- Visit the invoice creation page - the alert executes.

Impact:

- Stored XSS executes for all users viewing invoices.

- Attackers could steal sessions or perform actions as victims.

Suggested Fix:

- Escape HTML before rendering.

- Implement CSP headers.

- Sanitize user input and restrict dangerous characters.

Step 12 - Real-World XSS Mitigation Strategies (Defensive Angle)

Even mature teams get caught off-guard by XSS in uncommon fields like templates, reference numbers, or error pages.

Top defensive moves:

- HTML sanitization - use libraries like DOMPurify when user-generated HTML must be rendered.

- Output encoding - never write raw input to the DOM via innerHTML; encode it for the context where it appears.

- Security headers - deploy CSP and Referrer-Policy; X-XSS-Protection is deprecated in modern browsers, so do not rely on it.

- Code reviews - add “user input rendering” checks to your code review checklist.

- Automated scanning - integrate XSS tests in CI/CD pipelines.

These actions not only prevent stored XSS but also raise the app’s overall security maturity.
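As a sketch of the security-headers item, the function below assembles a restrictive Content-Security-Policy and a Referrer-Policy as plain strings. The specific directive set and the name `build_security_headers` are assumptions for illustration; a real policy has to be tuned to the scripts and assets the application actually loads.

```python
def build_security_headers() -> dict[str, str]:
    """Return response headers that restrict where scripts may come from."""
    csp = "; ".join([
        "default-src 'self'",  # fall back to same-origin for all resource types
        "script-src 'self'",   # no inline scripts or inline event handlers
        "object-src 'none'",   # no plugin content
        "base-uri 'self'",     # injected <base> tags cannot repoint relative URLs
    ])
    return {
        "Content-Security-Policy": csp,
        "Referrer-Policy": "strict-origin-when-cross-origin",
    }


for name, value in build_security_headers().items():
    print(f"{name}: {value}")
```

Because `script-src 'self'` omits `'unsafe-inline'`, a payload that relies on an inline event handler such as onerror would be blocked by the browser even if it reached the page.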

Step 13 - Motivation Corner: Your $250 Moment Is Waiting

The takeaway isn’t “this person got lucky.”

It’s “persistence + curiosity + ethics = success.”

That one late-night click, that one overlooked input, can change your confidence - and your career.

If you’re a beginner bug hunter reading this, don’t underestimate the power of small wins.

$250 isn’t just money - it’s validation that your skills matter.

Keep practicing, stay humble, and focus on learning rather than chasing payouts. The money follows the mastery.

References

- OWASP XSS Prevention Cheat Sheet

- PortSwigger Web Security Academy - XSS Learning Path

- Mozilla Developer Docs: Content Security Policy (CSP)

- DOMPurify Library for Sanitization

Published on herish.me - where real-world bug-bounty stories meet practical security education.